🐳 Optimizing Docker Builds with Cache: A Comprehensive Guide

Unleashing the Power of Efficient Containerization

Introduction

Docker images are the blueprints for containers, and optimizing their build process is crucial for efficiency and speed. In this post, we will look at optimizing Docker builds with the build cache, a technique that can significantly reduce build times and save resources.

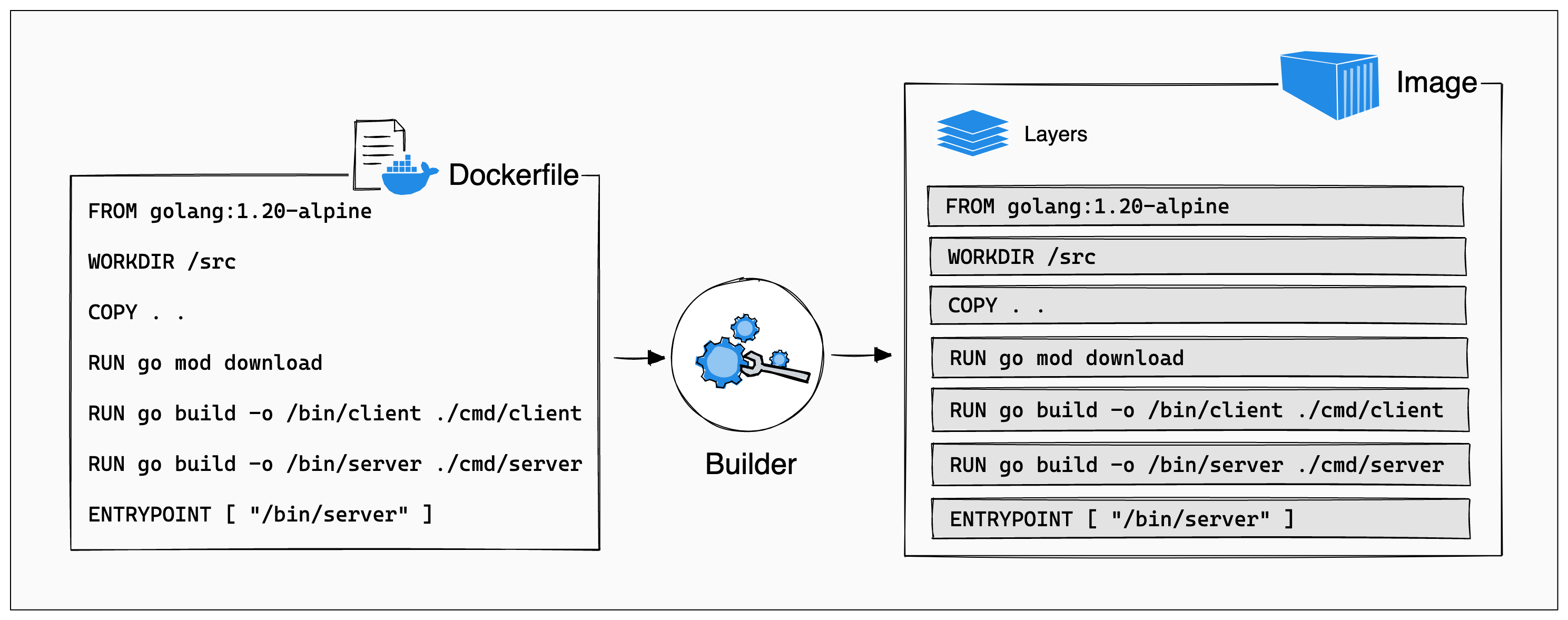

Understanding Docker Build Cache

When you build a Docker image, Docker uses a caching mechanism to speed up the process. Each instruction in a Dockerfile creates a layer, and these layers are cached (stored for future use). If a layer's instruction hasn't changed since the last build, Docker can reuse the cached layer instead of rebuilding it. This is where the Docker build cache comes into play.

Here's how Docker's caching mechanism works:

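Docker walks through the Dockerfile instruction by instruction and checks whether each one has changed since the last build. For COPY and ADD, it also compares the contents of the files being copied.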

If there are changes, it invalidates the cache for that specific instruction and all subsequent instructions.

If there are no changes, Docker uses the cached layers up to that point.

This process continues until the end of the Dockerfile, allowing for faster builds when possible.
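As an illustration of the steps above, here is a minimal sketch of a Dockerfile annotated with how the cache would treat each instruction. The base image tag, the curl package, and the run.sh script are assumptions chosen for this example, not part of the application discussed in this post.

```dockerfile
# Hypothetical example: the package (curl) and script (run.sh) are placeholders.
FROM debian:bookworm-slim

# Rarely changes: this layer stays cached until the instruction itself is edited.
RUN apt-get update \
    && apt-get install -y --no-install-recommends curl \
    && rm -rf /var/lib/apt/lists/*

# Changes often: Docker compares the contents of run.sh with the cached copy.
# A difference here is a cache miss for this step and every step after it.
COPY run.sh /usr/local/bin/run.sh

# Re-runs whenever the COPY above misses the cache, even though this line is unchanged.
RUN chmod +x /usr/local/bin/run.sh

CMD ["run.sh"]
```

With this layout, editing run.sh and rebuilding should re-run only the COPY and chmod steps, while the apt-get layer is served from the cache.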

Now that we understand how Docker's build cache works, let's explore strategies for optimizing Docker builds using cache effectively:

Layer Ordering: Arrange your Dockerfile instructions wisely. Instructions that change frequently (e.g., copying application code) should come later in the Dockerfile, while more stable steps (e.g., installing system packages) should come earlier. This way, a frequent change invalidates only the late layers, and the expensive early layers stay cached.

Use Multi-Stage Builds: Multi-stage builds allow you to use multiple FROM stages, each with its own base image, in a single Dockerfile. You can copy only the necessary artifacts from one stage to another, which keeps build tools out of the final image and limits the number of layers a change can invalidate. This is particularly useful for compiling code and creating lightweight runtime images.

Leverage .dockerignore: Use a .dockerignore file to exclude unnecessary files (for example node_modules, .git, and log files) from the build context. By reducing the size of the build context, you can improve build performance. Smaller build contexts lead to quicker cache comparisons, and excluded files can no longer invalidate the cache of a COPY instruction when they change.

Cache Dependencies Separately: If your application has dependencies, consider caching them separately. For example, if you use Node.js, you can first copy your package.json and install dependencies before copying the application code. This way, changes to your code won't trigger a cache invalidation for the dependencies.

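Prune Unused Resources: Periodically clean up your Docker environment using docker system prune. This removes stopped containers, unused networks, dangling images, and build cache that is no longer in use, all of which can consume disk space. Keep in mind that the first build after a prune may be slower, because some cached layers have to be rebuilt.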
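To make the multi-stage strategy above more concrete, here is a hedged sketch for a Node.js project. It assumes a hypothetical "build" script in package.json that writes its output to dist/, with dist/app.js as the entry point. Neither is part of the example project later in this post, and the stage name build is arbitrary.

```dockerfile
# Stage 1: install all dependencies and build the project.
# Assumption: package.json defines a "build" script that writes to dist/.
FROM node:14 AS build
WORKDIR /usr/src/app
COPY package*.json ./
RUN npm ci
COPY . .
RUN npm run build

# Stage 2: slimmer runtime image with production dependencies only.
FROM node:14-slim
WORKDIR /usr/src/app
COPY package*.json ./
RUN npm ci --only=production

# Copy only the build output from the first stage (dist/ is an assumption).
COPY --from=build /usr/src/app/dist ./dist

EXPOSE 8080
CMD ["node", "dist/app.js"]
```

In this sketch, both stages copy the package files before anything else, so the dependency layers in each stage stay cached until package.json or package-lock.json changes.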

Let's walk through an example of optimizing a Docker build with cache using a simplified Node.js application.

Suppose you have a Node.js application with the following directory structure:

    my-node-app/
    |-- Dockerfile
    |-- package.json
    |-- package-lock.json
    |-- app.js

Dockerfile for building this Node.js application:

    # Use an official Node.js runtime as the base image
    FROM node:14

    # Set the working directory in the container
    WORKDIR /usr/src/app

    # Copy the application source code
    COPY . .

    # Install application dependencies
    RUN npm install

    # Expose a port
    EXPOSE 8080

    # Start the Node.js application
    CMD ["node", "app.js"]

This Dockerfile is rather inefficient. Because COPY . . comes before RUN npm install, changing any file invalidates the cache for the COPY layer and for every instruction after it. All dependencies are therefore reinstalled on each build, even if they haven't changed since last time.

Instead, the COPY command can be split in two. First, copy over the package management files (in this case, package.json and package-lock.json). Then, install the dependencies. Finally, copy over the project source code, which is subject to frequent change.

    # Use an official Node.js runtime as the base image
    FROM node:14

    # Set the working directory in the container
    WORKDIR /usr/src/app

    # Copy package.json and package-lock.json to the container
    COPY package*.json ./

    # Install application dependencies
    RUN npm install

    # Copy the rest of the application source code
    COPY . .

    # Expose a port
    EXPOSE 8080

    # Start the Node.js application
    CMD ["node", "app.js"]

During the first build, all instructions run and the cache is populated. Now suppose you make changes to app.js, but the package.json and package-lock.json files remain the same. On the next build, Docker reuses the cached layers up to and including RUN npm install, and re-runs only the final COPY and the instructions after it. This significantly speeds up the build process.

Conclusion

That is it for our exploration of Docker layers and optimizing the build process using cache. I hope you find it useful.